This piece is divided into two parts. In this first section, I’ll walk through the technical case for a potential relative strength rotation from NVIDIA to AMD. In the second, I’ll unpack the underlying tech and business dynamics that could be driving this shift. Without further ado, let’s dive in.

Theme: Relative Strength Rotation – AMD Catching Up to NVDA

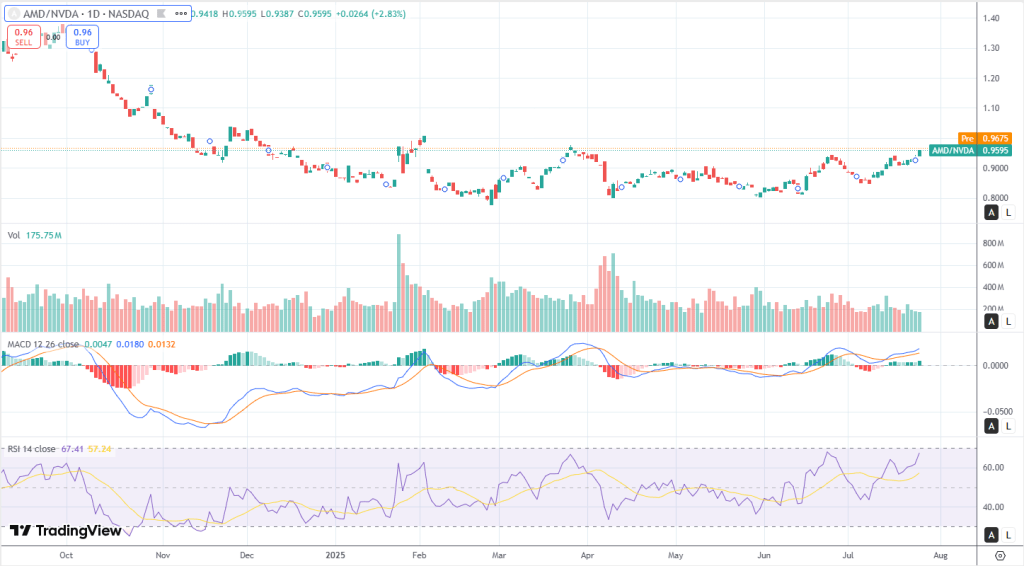

From a candlestick perspective, AMD’s recent price action shows clear signs of steady accumulation. We’re seeing a series of small-bodied bullish candles with rising closes, which points to consistent buying pressure without signs of exhaustion. The latest candle has only a short upper wick, further suggesting that buyers remain in control. There are no reversal patterns (no dojis or spinning tops), and the setup resembles a rising three methods continuation rather than a blow-off top. It’s a clean, controlled move up.

Momentum is also constructive. The RSI is climbing and currently reads 67.41, approaching the conventional overbought threshold of 70 but not yet in euphoric territory. MACD is rising with an expanding histogram, a classic sign of trend acceleration without overheating.
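For readers who want to reproduce these momentum readings on their own data, here is a minimal sketch of the two indicators. It assumes the common default settings (a 14-period Wilder RSI and a 12/26/9 MACD); the article does not state which settings or charting platform were used, so values may differ from the chart. The function names and the sample series are hypothetical.

```python
def ema(values, span):
    """Exponential moving average with smoothing factor 2 / (span + 1)."""
    alpha = 2.0 / (span + 1)
    out = [values[0]]  # seed with the first value (one common convention)
    for v in values[1:]:
        out.append(alpha * v + (1 - alpha) * out[-1])
    return out


def rsi(closes, period=14):
    """Wilder-smoothed RSI for the most recent bar in `closes`."""
    if len(closes) <= period:
        raise ValueError("need more than `period` closes")
    changes = [b - a for a, b in zip(closes, closes[1:])]
    gains = [max(c, 0.0) for c in changes]
    losses = [max(-c, 0.0) for c in changes]
    # Seed with simple averages, then apply Wilder's smoothing.
    avg_gain = sum(gains[:period]) / period
    avg_loss = sum(losses[:period]) / period
    for g, l in zip(gains[period:], losses[period:]):
        avg_gain = (avg_gain * (period - 1) + g) / period
        avg_loss = (avg_loss * (period - 1) + l) / period
    if avg_loss == 0:
        return 100.0  # no losses in the window
    rs = avg_gain / avg_loss
    return 100.0 - 100.0 / (1.0 + rs)


def macd_histogram(closes, fast=12, slow=26, signal=9):
    """MACD line minus its signal line, one value per bar."""
    macd_line = [f - s for f, s in zip(ema(closes, fast), ema(closes, slow))]
    signal_line = ema(macd_line, signal)
    return [m - s for m, s in zip(macd_line, signal_line)]


# Hypothetical closes, for illustration only (not actual market data).
closes = [0.85 + 0.003 * i + (0.004 if i % 3 == 0 else 0.0) for i in range(40)]
print(round(rsi(closes), 2), round(macd_histogram(closes)[-1], 5))
```

An "expanding histogram" in this framing simply means the last few values returned by `macd_histogram` are positive and increasing.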

From a Box perspective, AMD recently broke above a well-defined box in the $0.87–$0.93 range. The breakout above $0.93 was confirmed by multiple follow-through candles and a healthy MACD uptick. This confirms the breakout is being respected and is likely to be sustained.
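One way to make "confirmed by multiple follow-through candles" testable is sketched below. The rule is my own assumption, not a standard definition: the series must have closed inside or below the box at some point, and the most recent closes must form an unbroken run above the box top that is at least one breakout bar plus `follow_through` additional bars long. The function name and the sample closes are hypothetical; only the 0.93 box top comes from the text above.

```python
def breakout_confirmed(closes, box_top, follow_through=3):
    """Return True if the latest closes form an unbroken run above `box_top`
    of at least 1 + `follow_through` bars, preceded by a close at or below it."""
    run = 0
    for close in reversed(closes):
        if close > box_top:
            run += 1
        else:
            break
    came_from_box = run < len(closes)  # at least one earlier close was not above the top
    return came_from_box and run >= follow_through + 1


# Hypothetical closes, for illustration only (not actual market data).
sample = [0.90, 0.92, 0.94, 0.95, 0.955, 0.9675]
print(breakout_confirmed(sample, box_top=0.93))
```

A stricter version could also require rising volume or a minimum distance above the box, but that goes beyond what the text claims.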

Looking at Fibonacci levels, drawn from the March swing low around $0.80 to the July swing high at ~$0.9675, the key retracement levels to watch on any pullback are 23.6% at $0.9251 and 38.2% at $0.9026. However, if the breakout continues, extension targets come into play—127.2% at $1.00 and 161.8% at $1.0442. The $1.00 level is especially important—it represents both a fib extension and a psychological barrier, making it a natural test area.
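The arithmetic behind these levels can be sketched as follows. This assumes one common convention: retracements are measured down from the swing high, and extensions are measured up from the swing low as multiples of the full range. Charting platforms differ on the extension anchor, and the swing low above is only approximate ("around $0.80"), so the output will not necessarily match the chart-read values quoted in the text. The function name is hypothetical.

```python
def fib_levels(swing_low, swing_high):
    """Return (retracements, extensions) as dicts keyed by Fibonacci ratio."""
    rng = swing_high - swing_low
    # Retracements: how far price gives back from the swing high.
    retracements = {r: swing_high - r * rng for r in (0.236, 0.382, 0.5, 0.618)}
    # Extensions: projected from the swing low as a multiple of the range
    # (an assumption; some tools project from the swing high instead).
    extensions = {e: swing_low + e * rng for e in (1.272, 1.618)}
    return retracements, extensions


# Swing points taken from the text above; the low is approximate.
retracements, extensions = fib_levels(swing_low=0.80, swing_high=0.9675)
for ratio, level in {**retracements, **extensions}.items():
    print(f"{ratio:.3f} -> {level:.4f}")
```

Small changes in the chosen swing low move every level, which is why it is worth recomputing these from the exact pivots on your own chart.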

Tactically, this setup represents a momentum breakout from a prior consolidation box, supported by rising momentum indicators and a clean candle structure. Key levels to watch include $0.93 as new support (the top of the old box), $0.902 as secondary support (38.2% fib), and resistance at $0.9675 and $1.00. The absence of shakeouts or exhaustion wicks suggests that institutions are gradually increasing exposure to AMD at the expense of NVDA—a controlled rotation rather than a hype-driven move.

Now, I’ll dig into what could be fueling this rotation beneath the surface—factors like ROCm traction, hyperscaler relationships, and AMD’s multi-year roadmap. Stay tuned.

AMD’s AI Strategy: A Platform Shift with Open Ecosystem Focus

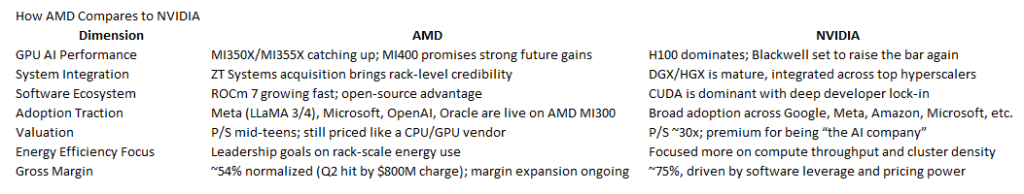

AMD’s AI strategy is no longer just about making faster chips—it’s about transforming into a full-stack platform company with an open ecosystem at its core. Central to this evolution is a clearly defined, multi-generational roadmap for AI GPUs. The MI300 series is already in production and seeing real-world adoption from hyperscalers like Meta and Microsoft.

Following that, the MI350 series (expected in 2025) targets massive performance leaps—up to 4x the compute power and 35x inference gains over its predecessor. Looking ahead, the MI400 series (2026) will push further into rack-scale integration, introducing system-level architecture optimized for Mixture of Experts (MoE) models under the codename “Helios.” This annual release cadence mimics NVIDIA’s own (e.g., Hopper → Blackwell → Rubin) and signals that AMD is serious about being a long-term AI compute contender.

A major part of this transformation is AMD’s move into full-system integration, echoing NVIDIA’s DGX model. Through the acquisition of ZT Systems, AMD can now deliver vertically integrated racks combining EPYC CPUs, Instinct GPUs, Pensando networking, and its ROCm software stack. This isn’t theoretical—deployments with Oracle and Microsoft confirm demand for turnkey AMD-based AI infrastructure.

On the software front, AMD’s ROCm 7 is finally showing signs of maturity. Once a liability compared to NVIDIA’s dominant CUDA, ROCm now supports over 2 million models on Hugging Face and offers day-one compatibility with major LLMs like Meta’s LLaMA 4 and Google’s Gemma 3. Regular biweekly updates and growing framework compatibility signal momentum, although CUDA still commands stronger developer mindshare. Still, ROCm’s open-source, cross-platform approach may gain favor as the industry looks for alternatives to proprietary AI stacks.

Lastly, AMD is positioning energy efficiency and total cost of ownership (TCO) as a differentiator. The MI350 platform has already surpassed AMD’s five-year goal of 30x energy efficiency, hitting 38x, and the MI400 targets a 20x rack-level improvement by 2030. While NVIDIA dominates through performance and ecosystem vitality, AMD is carving a parallel path that emphasizes sustainability, affordability, and openness in AI infrastructure.

Is AMD Catching Up?

The answer is yes, but with measured steps. AMD is clearly narrowing the gap by shifting from being a pure silicon supplier to becoming a full-stack AI systems platform, much like NVIDIA started doing several years ago. Key moves such as the expansion of the ROCm software stack and the acquisition of ZT Systems show that AMD is making serious investments in areas where it has historically underperformed: software maturity and integrated systems. The multi-year GPU roadmap demonstrates a long-term commitment to the AI infrastructure market and should give investors more confidence in AMD’s trajectory.

That said, NVIDIA still maintains a considerable lead in several crucial areas. CUDA continues to be a dominant force, acting as a powerful moat that locks in developers. The upcoming Blackwell architecture is expected to deliver higher absolute performance, reinforcing NVIDIA’s edge in the marketplace. Moreover, NVIDIA benefits from greater pricing power and superior margins, thanks in large part to its mature ecosystem.

At this point, it becomes very important to identify a “canary in the coal mine” type of metric signaling a shift between AMD and NVIDIA. We need something that can capture real-time market sentiment and, in parallel, lead revenue trends, while being consistently trackable. I believe the year-over-year delta in data center revenue is up to the task. This metric goes beyond measuring who’s growing; it reveals whether AMD is gaining ground in AI infrastructure.

Why does this matter? Because NVIDIA’s stock performance is almost entirely tied to its data center dominance, with nearly 90% of its recent revenue growth coming from that segment. AMD, meanwhile, is the only credible second-source provider in this high-growth market. If AMD begins to consistently close the gap, even if incrementally, it will likely signal to investors that its platform strategy is gaining traction. Such a trend could precede broader margin expansion and greater ecosystem adoption, which could unlock a re-rating of AMD’s valuation. In other words, this data center revenue share delta could be the market’s earliest tell that AMD’s flywheel is beginning to spin faster than expected.
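To make the metric concrete, here is a minimal sketch of how it could be tracked each quarter. It reflects my reading of the two phrasings used above: a growth delta (AMD’s year-over-year data center growth minus NVIDIA’s) and a share delta (the change in AMD’s share of the two companies’ combined data center revenue). The function names are hypothetical, and the figures in the usage lines are placeholders, not reported results.

```python
def yoy_growth(current, year_ago):
    """Year-over-year growth as a fraction (0.25 means +25%)."""
    return (current - year_ago) / year_ago


def growth_delta(amd_now, amd_year_ago, nvda_now, nvda_year_ago):
    """AMD's YoY data center growth minus NVIDIA's. Positive means AMD grew faster."""
    return yoy_growth(amd_now, amd_year_ago) - yoy_growth(nvda_now, nvda_year_ago)


def share_delta(amd_now, amd_year_ago, nvda_now, nvda_year_ago):
    """Change in AMD's share of combined AMD + NVIDIA data center revenue."""
    share_now = amd_now / (amd_now + nvda_now)
    share_year_ago = amd_year_ago / (amd_year_ago + nvda_year_ago)
    return share_now - share_year_ago


# Placeholder quarterly revenue in arbitrary units (NOT actual company figures).
amd_now, amd_year_ago = 3.0, 2.0
nvda_now, nvda_year_ago = 27.0, 20.0
print(f"growth delta: {growth_delta(amd_now, amd_year_ago, nvda_now, nvda_year_ago):+.3f}")
print(f"share delta:  {share_delta(amd_now, amd_year_ago, nvda_now, nvda_year_ago):+.4f}")
```

The two measures can disagree in the short run: AMD can grow faster in percentage terms from a small base while the absolute gap keeps widening, which is why tracking both over several quarters is more informative than a single reading.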

While the Data Center Revenue Share Delta serves as the key early indicator of AMD gaining ground in AI infrastructure, there are a couple of other metrics that can work as guides for our analysis. First, think ROCm ecosystem metrics. Growth in active developers and improved compatibility with major frameworks like PyTorch and TensorFlow will likely point to early signs of software traction. In my opinion, a stronger developer ecosystem will likely precede hardware adoption, hence the need to keep this under watch.

Second, monitor customer disclosures for named wins. If companies like Meta or Microsoft begin publicly stating that AMD GPUs are being used for real production workloads, that will likely mark a pivotal shift, offering tangible evidence that AMD is breaking through.

Lastly, I believe that keeping an eye on gross margin expansion at AMD can also be of immense help. Sure, this is a lagging indicator, but if we focus specifically on the data center mix, it can still be a powerful signal for pricing power and improved scale.

Other Aspects to Consider

One often overlooked factor in the AI race is the deep interdependency between software, systems, and support. For NVIDIA, these elements come together in CUDA, DGX systems, and an ecosystem of tooling and developer support that together provide a platform experience.

AMD, by contrast, still operates with a more modular approach. Its ROCm software stack, MI-series accelerators, and ZT Systems hardware are powerful in isolation but not yet fully integrated into a unified offering. Converging these pieces into a streamlined, end-to-end platform will be critical. That integration could determine whether it can meaningfully close the valuation gap with NVIDIA.

The maturity of an AI software ecosystem directly impacts both customer stickiness and long-term growth. NVIDIA’s CUDA is the gold standard here, but it took over 15 years to build. It’s deeply embedded in everything from machine learning compilers to orchestration frameworks and automation tools. This level of integration creates enormous switching costs. Many customers may experiment with AMD’s offerings, but few are willing to commit fully, given how entrenched CUDA is in their workflows.

That said, AMD’s ROCm stack is making underappreciated progress. With biweekly updates, support for over 2 million Hugging Face models, and day-one compatibility with major LLMs like Meta’s LLaMA 4 and Google’s Gemma, ROCm is showing signs of serious momentum. However, one detail buried in recent filings is critical: ROCm still lacks full interoperability with some major orchestration platforms, such as Kubernetes, Ray, or PyTorch/XLA. This limits its ease of deployment across enterprise and cloud environments.

The hidden link here is strategic: stronger ROCm traction leads to better system sell-through, which ultimately drives margin expansion. Therefore, a leading indicator to watch is ROCm’s integration with platforms like Microsoft Azure or Google Cloud. Once ROCm becomes plug-and-play within these ecosystems, AMD’s AI story could enter a new phase of adoption and monetization.

Inventory and lead time management may look like operational details, but in this context they often conceal deeper execution risks. NVIDIA operates in a supply-limited mode by design, carefully managing GPU availability to maintain pricing power and prioritize allocations to high-value customers. This creates strategic scarcity, and as a result, NVIDIA’s backlog is not just a measure of raw demand, but a proxy for demand quality.

AMD’s situation is more complicated. The company recently took an $800 million inventory charge related to U.S. export restrictions on MI308 shipments to China. At the same time, it’s already ramping up supply for its next-gen MI350 and MI400 chips, both of which have long production lead times. This move raises an important question: Is AMD prudently preparing for future demand, or is it getting ahead of itself? Without sufficient ROCm adoption or real-world workload utilization, there’s a risk that hardware will sit idle—driving up costs and compressing margins.

The key point here is that AI revenue isn’t the same as AI utilization. Until AMD can show that its GPUs are being used at scale in customer workloads—on par with NVIDIA’s systems—its revenue growth may exaggerate its true impact in the market. The critical, often overlooked link is that excess inventory or premature scaling can lead to underutilized infrastructure, turning strategic bets into margin headwinds.

Finally, customer mix plays a pivotal role in determining whether a company’s revenue stream is fragile or resilient. NVIDIA’s customer base is highly concentrated among hyperscalers and sovereign-scale infrastructure buyers. These relationships often involve long-term, multi-billion-dollar commitments, which provide revenue stability but come with concentration risk. If the AI growth narrative shifts toward smaller enterprises or decentralized use cases, NVIDIA may struggle to scale its dominance down into that fragmented middle market quickly.

In contrast, AMD is positioning itself to capitalize on that exact shift. While it’s still targeting top-tier cloud buyers, AMD is also expanding aggressively into commercial PCs, embedded systems, edge AI, and enterprise workloads, markets that are often more sensitive to total cost of ownership. Its MI300 and MI400 product roadmap is designed not just for cloud hyperscalers, but for broader adoption across industries like healthcare, telecom, and manufacturing. This diversification could allow AMD to capture market share where NVIDIA is underrepresented.

The hidden link here is strategic resilience. AMD’s broader exposure provides a natural portfolio hedge against any slowdown in hyperscaler spending. As AI moves beyond centralized data centers and into more distributed enterprise and edge applications, AMD’s customer mix could evolve into a key advantage.

Wrapping-Up

The recent breakout in AMD’s stock suggests that momentum is building, but the stock remains discounted on fundamentals. The market is beginning to acknowledge AMD’s positive developments, such as steady GPU traction with the MI300 and MI325X, even in the face of geopolitical headwinds.

Technical signals, such as a MACD crossover and an RSI rising toward 70, reinforce bullish sentiment. This isn’t a speculative pop; it reflects real operational progress. Yet, AMD’s relative strength line against NVIDIA shows that this breakout is still occurring under the shadow of its larger rival. It aligns with the view that AMD is evolving into a platform company, but the market continues to price it like a component supplier.

The modest relative strength also implies that the market is waiting for more tangible proof. Despite ROCm’s progress and reported wins with major hyperscalers, AMD has not yet matched NVIDIA’s full-stack grip—CUDA, integrated hardware-software ecosystems, and developer dominance. For the valuation gap to truly close, investors may need to see more concrete wins: MI350 or MI400 outperforming expectations, a clear ramp in ZT Systems deployments, or ROCm gaining traction as a preferred platform among developers. Until then, skepticism around platform stickiness will likely persist.

Importantly, this breakout signals optionality—not clear market leadership, at least not yet. The price action opens the door to a potential re-rating cycle, reminiscent of NVIDIA’s surge in 2023, but AMD hasn’t hit that level of escape velocity. The current move reflects growing belief in AMD’s strategic roadmap, but the market still needs validation through results and adoption metrics before fully embracing the AI platform thesis.

Finally, AMD remains well-positioned within a powerful thematic tailwind. Indicators like RSI and MACD are not only technically bullish but also aligned with the broader AI infrastructure build-out story. AMD continues to benefit from macro trends even if it’s moving at a slower pace than NVIDIA. As long as execution continues, AMD should ride this wave higher, with further upside potential if optionality starts converting into realized performance.

Disclaimer: LONG AMD. This text expresses the views of the author as of the date indicated, and such views are subject to change without notice. The author has no duty or obligation to update the information contained herein. Further, wherever there is the potential for profit, there is also the possibility of loss. Additionally, the present article is being made available for educational purposes only and should not be used for any other purpose. The information contained herein does not constitute and should not be construed as an offering of advisory services or an offer to sell or solicitation to buy any securities or related financial instruments in any jurisdiction. Some information and data contained herein concerning economic trends and performance is based on or derived from information provided by independent third-party sources. The author trusts that the sources from which such information has been obtained are reliable; however, it cannot guarantee the accuracy of such information and has not independently verified the accuracy or completeness of such information or the assumptions on which such information is based.
