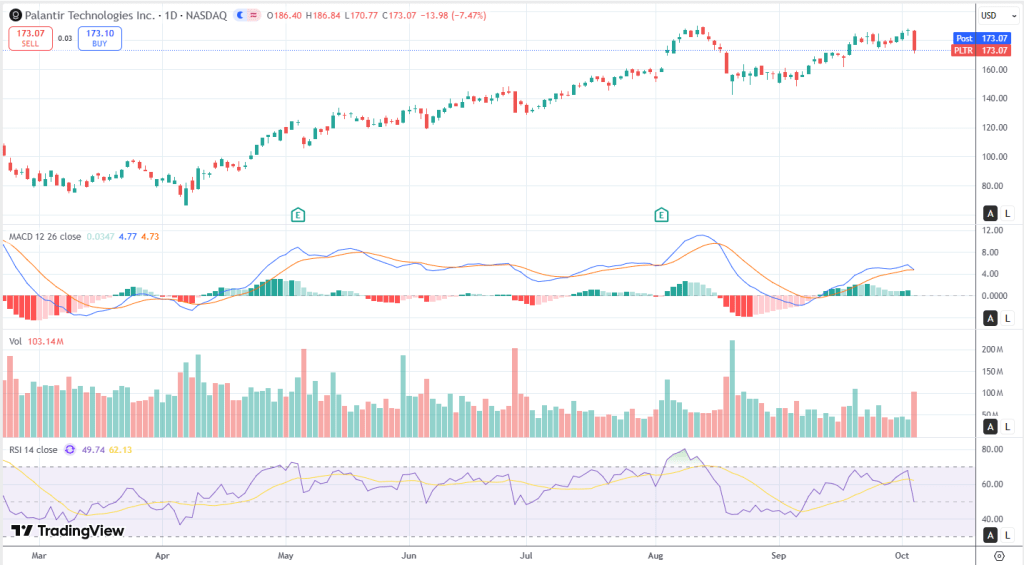

Reuters/Breaking Defense dropped a spicy one: an internal Army CTO memo flagged the NGC2 prototype (Anduril is prime; partners include Palantir, Microsoft, etc.) as “very high risk” with “fundamental security” issues. Think basic zero-trust fails: access control (who sees what) wasn’t locked down, user activity wasn’t logged, and third-party apps slipped in without full Army security assessments (one had 25 high-severity vulns; others had hundreds pending review). The market saw the headline and smacked PLTR ~5%, classic “shoot first, read later.”

Context matters though. This was a prototype doing the usual Pentagon dance: push fast, integrate a ton of stuff, then let the security reviewers unleash the RMF/ATO checklist from hell. The Army CIO basically said the process did what it’s supposed to do (find, triage, mitigate) and the division-level event still went forward. Palantir’s line: their platform is already cleared to operate at IL5/IL6, no Palantir-specific vulns were found, and they’re part of the tooling that gives the Army visibility to assess the broader NGC2 stack. Translation: this reads like program-level integration risk, not “Palantir’s core software is Swiss cheese.”

For investors, the near-term read is headline/sentiment risk, plus maybe some schedule friction as the program tightens access control, audit, and app-vetting gates.

Medium term, this kind of scare usually becomes a scope expansion for whoever can actually deliver governance: attribute-based access, per-dataset policy, end-to-end logging, SBOMs, automated vuln management, and fast rollbacks. That’s very much in Palantir’s wheelhouse (Foundry/AIP for data governance + Apollo for deployment hygiene). If the Army doubles down on controls and observability, the “fix phase” can be a revenue driver for the folks who sell exactly that.
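For the less security-fluent, here is roughly what "attribute-based access, per-dataset policy, end-to-end logging" means in practice. This is a toy sketch under my own assumptions, not anything from NGC2 or a Palantir API: the names `User`, `DatasetPolicy`, and `AccessGate`, and the attribute strings, are all hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class User:
    name: str
    attributes: frozenset  # hypothetical tags, e.g. {"clearance:secret", "role:fires"}


@dataclass(frozen=True)
class DatasetPolicy:
    dataset: str
    required: frozenset  # every attribute a user must hold to read this dataset


@dataclass
class AccessGate:
    policies: dict  # dataset name -> DatasetPolicy
    audit_log: list = field(default_factory=list)

    def can_read(self, user: User, dataset: str) -> bool:
        policy = self.policies.get(dataset)
        # Default deny: a dataset with no policy is readable by no one.
        allowed = policy is not None and policy.required <= user.attributes
        # Record every decision, allowed or denied, so activity can be reviewed.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user.name,
            "dataset": dataset,
            "allowed": allowed,
        })
        return allowed


# Illustrative usage with made-up data.
gate = AccessGate({
    "fires": DatasetPolicy("fires", frozenset({"clearance:secret", "role:fires"})),
})
analyst = User("analyst", frozenset({"clearance:secret"}))
print(gate.can_read(analyst, "fires"))  # analyst lacks "role:fires", so this is denied
print(len(gate.audit_log))              # the denial is still logged
```

The two gaps the memo reportedly flagged map onto the two halves of `can_read`: the policy check is the "who sees what" part, and the audit entry is the "user activity logged" part. Real systems add far more (policy versioning, tamper-resistant logs, identity federation), which is where the paid work is.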

Bottom line: this looks less like a Palantir-specific failure and more like the inevitable security hardening chapter of an ambitious, multi-vendor prototype. The strategic tailwinds, Maven/Titan/JADC2-style budgets, aren’t going away, and higher security bars actually reinforce moats for integrators who can prove zero-trust at scale. Keep an eye on Army follow-ups, anything Anduril says about timelines, whether task orders keep flowing, and whether oversight turns this into theater or gives a quiet thumbs-up. If you’re long PLTR for “secure AI in production,” this is noisy, not thesis-breaking, and could even set up more work securing the stack. Not financial advice.
