This report merges fundamentals with price action in an attempt to give a clear signal at key moments. NFA.

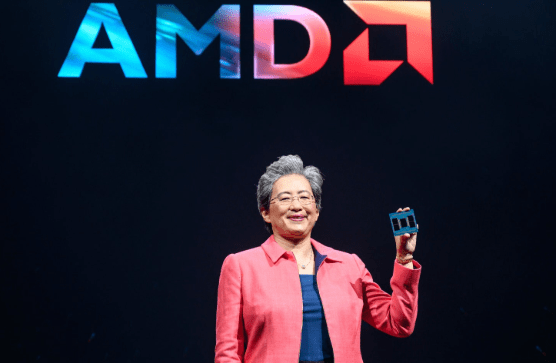

CES keynote

AMD used its CES keynote to make a simple point: it is no longer a parts vendor but a platform company for AI across rack-scale data center, client, and edge, and it now has credible customer pull to match. The star was Helios, a double-wide, OCP-based rack reference design built on AMD’s next-gen MI455 GPUs (2/3nm, HBM4, 3D chiplets), Venice EPYC CPUs (Zen 6, up to 256 cores), and Pensando networking. Architecturally, this matters because AMD isn’t just selling accelerators; it’s selling a turnkey, open, Ethernet-first system that OEMs/ODMs can replicate, which targets exactly where the industry has been struggling: integration at scale. If you were waiting for AMD’s “DGX moment,” this is it, only built on open standards (UALink over Ethernet, UEC).

Second, the demand validation didn’t come from slides; it came from customers on stage. OpenAI’s Greg Brockman essentially said “we’re compute-constrained,” endorsed co-design on MI455, and described agentic workloads that balloon inference demand. Luma AI (video/multimodal) said ~60% of its inference already runs on AMD and that it plans to scale deployments 10x; Fei-Fei Li’s World Labs showed spatial/3D models already optimized on MI325X and moving to MI450. In healthcare, Illumina, AstraZeneca, and Absci laid out production use cases where memory capacity, bandwidth, and inference economics (AMD’s strong suits) are the gating factors. This is the right kind of proof: real workloads, not just peak TFLOPS.

Third, AMD extended its roadmap credibility: MI455 this year, then MI500 (CDNA 6, 2nm, HBM4E) in 2027, with AMD claiming a 1,000× AI performance gain over four years. On PCs, Ryzen AI 400 and Ryzen AI Max/Halo target local agents with unified memory and a beefy NPU/GPU; Liquid AI’s lightweight multimodal models offer a plausible path to on-device AI that isn’t marketing vapor. That broadens TAM and mix: data center remains the profit engine, but AI PCs and edge add unit volume and a stickier ecosystem.
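For scale, if the 1,000× figure is read as a steady compound rate across the four years (my assumption; AMD quotes it as a cumulative number, not a yearly one), it works out to roughly 5.6× per year:

$$
r = 1000^{1/4} = 10^{3/4} \approx 5.62\times \text{ per year}
$$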

Why it matters competitively: Nvidia still leads in frontier training and end-to-end software, but AMD is closing the system-integration gap and leaning into openness. Helios, day-0 ROCm support, and a rapid release cadence lower the barrier to adopting AMD infrastructure; sovereigns and enterprises that don’t want a closed fabric now have a clear alternative. If AMD can pair HBM4 supply (Samsung/Micron) with rack-level leadership in tokens-per-watt and tokens-per-dollar on real models, the share narrative shifts from “second source” to “second platform.”

What is the Price Action telling us?

https://www.tradingview.com/chart/AMD/XRzFTVXt-AMD-Range-Box-Compression-230-Breakout-Trigger/

AMD is still trading in a box between 200–205 and 228–230. We’re camped in the upper half (~223–224), which is where breakouts are born, or fail. The tell is acceptance above 228–230 on closes, not intraday wicks.

Levels that matter (with Fib confluence):

- Support ladder: 223/222.9 (61.8%) to 212.8 (78.6%) to 205/200 (range floor).

- Resistance ladder: 228–230 (box top & 50% retrace) to 237.1 (38.2%) to 245.8 (23.6%) to 260 (prior high).

- If 260 is reclaimed later, extensions line up at 276/297/320.
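The retracement and extension levels above are consistent with a single swing. Here's a minimal Python sketch that re-draws the ladder, assuming a swing high of 260 (the prior high) and a swing low of 200 (the range floor). The post doesn't state its anchor points, so treat those two inputs as an assumption:

```python
# Sketch: rebuild the Fib ladder from one swing.
# ASSUMPTION: swing high = 260 (prior high), swing low = 200 (range floor).
# The anchors are inferred, not stated in the post.

SWING_HIGH = 260.0
SWING_LOW = 200.0

RETRACEMENTS = (0.236, 0.382, 0.5, 0.618, 0.786)
EXTENSIONS = (1.272, 1.618, 2.0)


def retracement_levels(high: float, low: float) -> dict[float, float]:
    """Price at each retracement ratio, measured down from the swing high."""
    span = high - low
    return {r: round(high - r * span, 1) for r in RETRACEMENTS}


def extension_levels(high: float, low: float) -> dict[float, float]:
    """Price at each extension ratio, projected up from the swing low."""
    span = high - low
    return {e: round(low + e * span, 1) for e in EXTENSIONS}


if __name__ == "__main__":
    for ratio, price in retracement_levels(SWING_HIGH, SWING_LOW).items():
        print(f"{ratio:>7.1%} retracement: {price}")
    for ratio, price in extension_levels(SWING_HIGH, SWING_LOW).items():
        print(f"{ratio:>7.1%} extension:   {price}")
```

With those anchors the output reproduces the quoted ladder (245.8, 237.1, 230.0, 222.9, 212.8), and the 276/297/320 extensions line up with the 127.2%, 161.8%, and 200% ratios. That is why I read the 200 → 260 leg as the anchor. Change `SWING_HIGH` / `SWING_LOW` to re-draw the ladder for a different swing.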

TPUs change the tape risk, not the thesis:

I believe AMD’s price has been muted so far mainly by the hype around Google’s TPUs. Google does have a very good inference strategy, but:

- Google’s TPUs are a credible in-house alternative that creates pricing pressure on merchant GPUs and gives hyperscalers bargaining leverage. Near term, that can inject headline volatility right at 228–230 (classic “sell-the-breakout” attempts).

- But TPUs are capacity-, power-, and portability-constrained, and largely cloud-gated. The broader market still needs flexible, merchant, rack-scale GPUs. That dynamic actually helps AMD as the only open, Ethernet-first #2 platform (Helios/MI455, Venice EPYC, ROCm), while also capping NVDA’s pricing umbrella.

What confirms a real breakout (beyond price):

- Daily closes above 230 on above-average volume, plus improving AMD-vs-NVDA relative strength.

- Headlines that speak to merchant demand, not just TPU rhetoric: Helios/MI455 cluster awards, HBM4 allocation momentum, DOE (Genesis, Lux/Discovery) progress, and a steady cadence of day-0 ROCm model support. Those turn 230 from a lid into a floor.
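The price-side half of that checklist can be made mechanical. A minimal sketch follows. The function name is hypothetical, and the definitions are my assumptions, not the post's: "above-average volume" means above the mean of the prior 20 sessions, and "improving relative strength" means the AMD/NVDA close ratio is higher than it was 5 sessions earlier. The headline side of the checklist isn't something a function can check.

```python
from statistics import mean

BREAKOUT_LEVEL = 230.0  # box top from the post


def breakout_confirmed(amd_closes, amd_volumes, nvda_closes,
                       level=BREAKOUT_LEVEL, vol_window=20, rs_lookback=5):
    """Check the price-side breakout conditions on the latest daily bar.

    ASSUMPTIONS (not from the post): volume is "above average" if it beats
    the mean of the prior `vol_window` sessions; relative strength is
    "improving" if AMD/NVDA is higher than `rs_lookback` sessions ago.
    """
    n = len(amd_closes)
    if len(amd_volumes) != n or len(nvda_closes) != n:
        raise ValueError("series must be the same length")
    if n < max(vol_window, rs_lookback) + 1:
        raise ValueError("not enough history")

    # 1) A daily close above the box top (closes, not wicks).
    close_ok = amd_closes[-1] > level
    # 2) Today's volume vs. the average of the sessions *before* today.
    avg_volume = mean(amd_volumes[-vol_window - 1:-1])
    volume_ok = amd_volumes[-1] > avg_volume
    # 3) Relative strength: AMD close divided by NVDA close, now vs. earlier.
    rs_now = amd_closes[-1] / nvda_closes[-1]
    rs_before = amd_closes[-1 - rs_lookback] / nvda_closes[-1 - rs_lookback]
    rs_ok = rs_now > rs_before

    return close_ok and volume_ok and rs_ok


# Illustrative, made-up series: 20 quiet sessions, then a high-volume push.
amd = [224.0] * 20 + [231.5]
vol = [40e6] * 20 + [65e6]
nvda = [180.0] * 21
print(breakout_confirmed(amd, vol, nvda))  # True
```

All three conditions have to hold at once. A close above 230 on average volume, or while lagging NVDA, returns `False`, which matches the post's point that price alone isn't enough.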

Bias & playbook (now incorporating TPU noise):

- Mildly bullish while above 223/222.9. We’re pressing the top rail; momentum’s trying to turn up.

- Above 230 (close/hold): bulls in control; targets 237 → 246 → 260. Expect headline feints around TPUs, and treat them as noise unless they come with clear signs of HBM being diverted away from MI4xx or AMD guidance cuts.

- Fail at 228–230 and lose 222.9: expect a range rotation to 212.8, possibly the 205/200 reload zone. That doesn’t break the secular story; it just resets the swing while the market digests TPU chatter.
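The playbook boils down to three zones keyed off the daily close. A hypothetical helper makes the decision rule explicit. The thresholds are the post's levels; the function name and return shape are mine.

```python
# Sketch: the bias/playbook as a lookup on the daily close.
# ASSUMPTION: zones use daily closes only (the post stresses closes over wicks).
# The helper is hypothetical; only the price levels come from the post.


def playbook_bias(daily_close: float) -> tuple[str, list[float]]:
    """Return (bias, levels to watch next) for a given daily close."""
    if daily_close > 230.0:
        # Accepted above the box top: upside targets from the resistance ladder.
        return "bullish", [237.0, 246.0, 260.0]
    if daily_close >= 222.9:
        # Still above the 61.8% line, pressing the 228-230 top rail.
        return "mildly bullish", [228.0, 230.0]
    # Lost 222.9: range rotation toward the lower rungs of the support ladder.
    return "range rotation", [212.8, 205.0, 200.0]


for close in (223.5, 231.2, 219.0):
    print(close, playbook_bias(close))
```

It deliberately ignores intraday wicks and headlines. The TPU caveat in the bullets is a judgment call that a simple lookup can't capture.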

Why I’m leaning long into the trigger:

The fundamental tape (the OpenAI multi-GW pact, the Helios rack-scale unveiling, ROCm cadence, DOE supercomputers, the Sanmina NPI partnership, and HBM4 sourcing progress) argues that AMD has graduated from chip vendor to platform supplier. TPUs may pressure pricing, but they also force multi-source strategies, and AMD is the merchant alternative customers need.

Bottom line:

Respect the box, but I’m a buyer on confirmation, adding on a daily close above 230. If TPU headlines ambush the breakout, the line in the sand is 222.9; below that, let it rotate and reset at 212–205 while the long-term thesis stays intact.

Not Financial Advice
